Course Design By

Nasscom & Wipro

Understand how the Databricks Lakehouse combines the best of data lakes and data warehouses.

Learn how to perform ETL (Extract, Transform, Load) operations with Spark, using SQL and Python.

Construct data pipelines that can process large volumes of data.

Understand data security and data quality practices that keep your data protected and clean.

Prepare to pass official Databricks certification exams.

In India: Freshers can earn between 6 and 10 LPA (lakh rupees per annum).

In the US: Freshers earn about $84,000 per year.

Junior Data Engineer: Start by helping build and manage data pipelines.

Data Analyst: Work on reports and business insights.

Machine Learning Engineer: Train and use AI models.

Senior Data Engineer: Lead data projects and guide a team.

Data Architect: Plan and build entire data systems.

Big Demand: Many companies need Databricks experts.

Practical Learning: You learn by doing real projects.

Certifications: You prepare for industry-level certifications.

Used Worldwide: Databricks is used in tech, banking, healthcare, and more.

Data Engineer: Builds systems to move and clean data.

Data Analyst: Studies data to provide useful business insights.

Machine Learning Engineer: Creates and uses AI models.

Data Scientist: Works with complex data and makes predictions.

Data Architect: Designs how data should flow and be stored.

Use Apache Spark to process data fast.

Create pipelines to move and clean data.

Work in teams with engineers, analysts, and developers.

Keep data systems running smoothly and securely.

Tech Companies: Such as Microsoft, Amazon, and Google.

Banks & Finance: To study data for business decisions.

Healthcare: For managing patient data and reports.

Retail: To track sales and inventory trends.

Consulting Firms: Firms that help other companies manage data.

Certified Spark Developer: For Spark programming.

Certified Data Engineer Associate: For building data pipelines.

Certified Machine Learning Associate: For ML-related work.

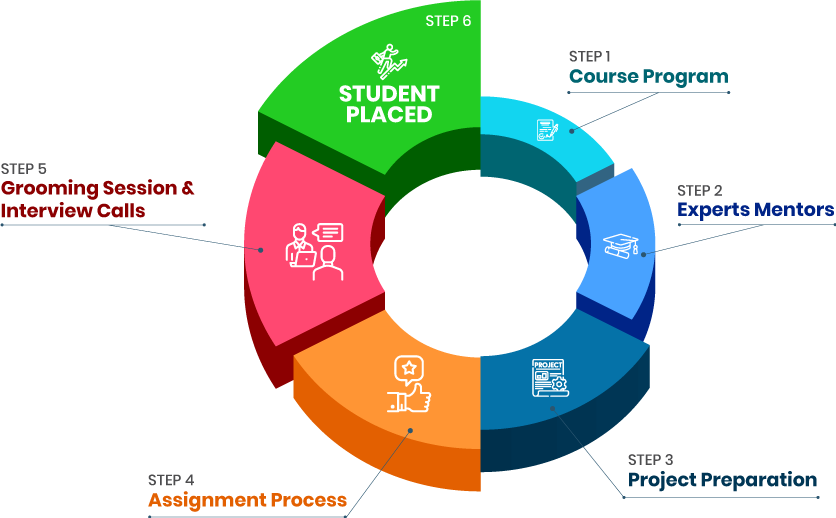

Resume writing and profile building.

Practice interviews and mock tests.

Access to our network of hiring partners.

One-on-one mentoring sessions.

Job alerts and interview scheduling help.

We train you to get hired.



Course Offered By

Croma Campus


14-Jun-2025*

16-Jun-2025*

18-Jun-2025*


You will get a certificate after completion of the program.



Empowering Learning Through Real Experiences and Innovation


Phone (For Voice Call): +91-971 152 6942

WhatsApp (For Call & Chat): +91-971 152 6942

Get a look at the entire curriculum, designed with placement guidance in mind.


Ready to streamline your process? Submit your batch request today!

Yes, Databricks Certification Training is beginner-friendly and teaches everything from the basics.

Basic Python or SQL helps, but we’ll cover what you need during training.

Yes, you will get a completion certificate. You can also take the official Databricks certification exams.

Yes, the course is focused on hands-on training with real data projects.

Yes, we offer full placement support including interview prep and job leads.
